Simulated EEG-Driven Audio Information Mapping Using Inner Hair-Cell Model and Spiking Neural Network

Pasquale Mainolfi

- Format: oral

- Session: papers-3

- Presence: remote

- Duration: 15 min

- Type: long

Abstract:

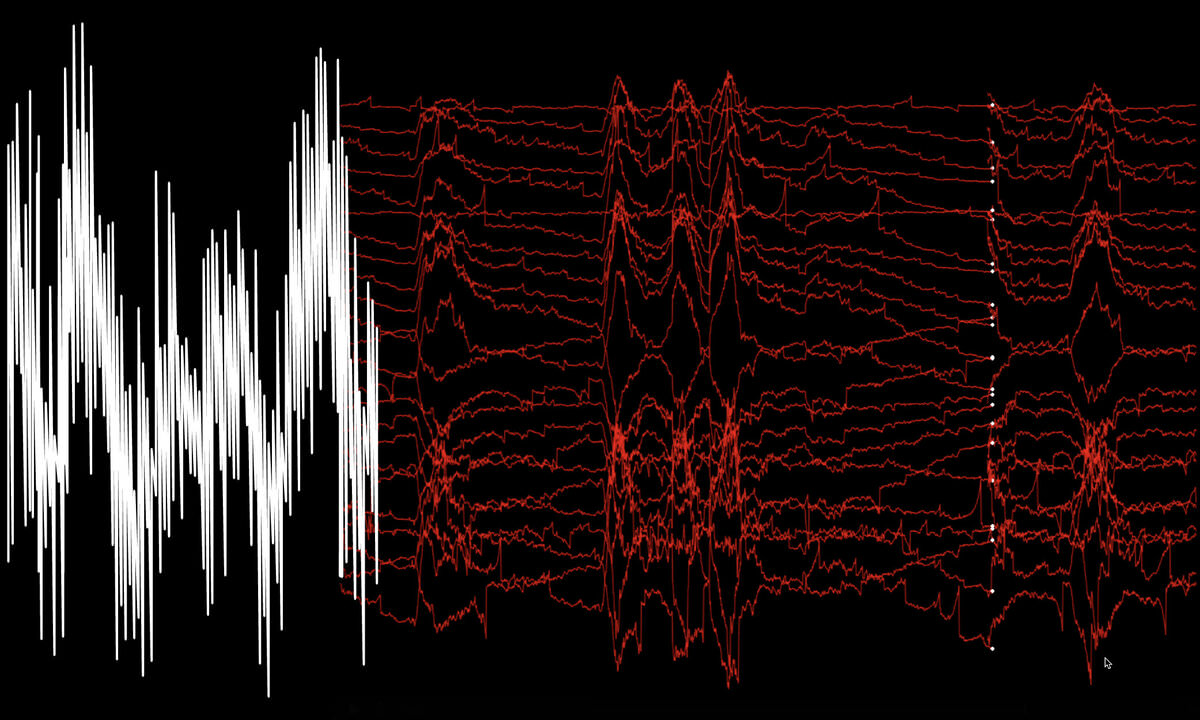

This study presents a framework for mapping audio information into simulated neural signals and dynamic control maps. The system uses a biologically inspired architecture that traces the auditory pathway from the cochlea to the auditory cortex, transforming acoustic features into neural representations by combining Meddis's Inner Hair-Cell (IHC) model with spiking neural networks (SNNs). The mapping proceeds in three phases. First, the IHC model converts sound waves into neural impulses by simulating the mechano-electrical transduction of the hair cells. Second, an Izhikevich-based neural network encodes these impulses into spatio-temporal patterns, where spike-timing-dependent plasticity (STDP) allows activation structures to emerge that reflect the complexity of the acoustic information. Finally, these patterns are mapped both into EEG-like signals and into continuous control maps for real-time interactive performance control. The approach bridges neural dynamics and signal processing, offering a new paradigm for representing sound information. The generated control maps act as a natural interface between the acoustic and parametric domains, enabling applications ranging from generative sound design to adaptive performance control, in which neuromorphological sound translation opens up new forms of audio-driven interaction.
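The first phase, hair-cell transduction, can be illustrated with a minimal Python sketch of the Meddis transmitter-reservoir model (free transmitter `q`, cleft contents `c`, reprocessing store `w`). It assumes the commonly cited Meddis parameter values (as used, for example, in Slaney's Auditory Toolbox) and a forward-Euler update at the audio sample rate. The function name `meddis_ihc` is hypothetical, and in a full cochlear front end the input would be one basilar-membrane filter channel rather than the raw waveform; this is not the paper's implementation.

```python
import numpy as np


def meddis_ihc(signal, fs):
    """Sketch of the Meddis inner hair-cell model.

    Returns the firing probability per sample for one input channel.
    """
    # Commonly cited Meddis parameter set (assumption, not from the paper).
    M, A, B, g = 1.0, 5.0, 300.0, 2000.0
    y, l, r, x, h = 5.05, 2500.0, 6580.0, 66.31, 50000.0
    dt = 1.0 / fs

    # Start from the equilibrium (spontaneous) state for zero input.
    kt = g * A / (A + B)
    c = M * y * kt / (l * kt + y * (l + r))
    q = c * (l + r) / kt
    w = c * r / x

    out = np.empty(len(signal))
    for i, s in enumerate(signal):
        st = max(s + A, 0.0)                 # half-wave limited stimulus
        kt = g * st / (st + B)               # membrane permeability
        replenish = max(y * dt * (M - q), 0.0)
        eject = kt * dt * q                  # transmitter released into cleft
        loss = l * dt * c                    # lost from the cleft
        reuptake = r * dt * c                # taken back into the cell
        reprocess = x * dt * w               # returned to the free pool
        q += replenish - eject + reprocess
        c += eject - loss - reuptake
        w += reuptake - reprocess
        out[i] = h * c * dt                  # firing probability this sample
    return out
```

With zero input the state stays at its equilibrium, so the output remains at the spontaneous firing probability; a stimulus raises it. Sampling each output value as a Bernoulli trial yields an auditory-nerve-like spike train.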
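The second phase can be sketched as a layer of Izhikevich neurons driven by input spike trains, for example trains sampled from hair-cell firing probabilities, with pair-based, trace-driven STDP on the input weights. Only the regular-spiking parameters (a=0.02, b=0.2, c=-65, d=8) are Izhikevich's published defaults. The layer size, synaptic `gain`, learning rates, time constants, weight bounds, and the function name `izhikevich_stdp` are hypothetical choices for illustration, not the paper's configuration.

```python
import numpy as np


def izhikevich_stdp(in_spikes, n_out=8, dt=0.5, seed=0,
                    a_plus=0.01, a_minus=0.012,
                    tau_plus=20.0, tau_minus=20.0,
                    w_max=1.0, gain=20.0):
    """in_spikes: bool array (T, n_in), one row per dt (ms) step.

    Returns (out_spikes: bool (T, n_out), learned weights W: (n_out, n_in)).
    """
    rng = np.random.default_rng(seed)
    T, n_in = in_spikes.shape
    W = rng.uniform(0.2, 0.6, size=(n_out, n_in))

    a, b, c, d = 0.02, 0.2, -65.0, 8.0       # regular-spiking neuron
    v = np.full(n_out, c)                     # membrane potential (mV)
    u = b * v                                 # recovery variable
    x_pre = np.zeros(n_in)                    # presynaptic STDP traces
    x_post = np.zeros(n_out)                  # postsynaptic STDP traces
    out = np.zeros((T, n_out), dtype=bool)

    for t in range(T):
        pre = in_spikes[t]
        I = gain * (W @ pre.astype(float))    # synaptic input current
        v += dt * (0.04 * v**2 + 5.0 * v + 140.0 - u + I)
        u += dt * a * (b * v - u)
        fired = v >= 30.0
        v[fired] = c                          # spike reset
        u[fired] += d
        out[t] = fired

        # Decay traces, then update weights using past spikes only.
        x_pre *= np.exp(-dt / tau_plus)
        x_post *= np.exp(-dt / tau_minus)
        W[fired, :] += a_plus * x_pre         # LTP: pre before post
        W[:, pre] -= a_minus * x_post[:, None]  # LTD: post before pre
        np.clip(W, 0.0, w_max, out=W)

        # Register this step's spikes in the traces.
        x_pre[pre] += 1.0
        x_post[fired] += 1.0
    return out, W
```

Setting `a_minus` slightly above `a_plus` biases the rule toward depression, which keeps uncorrelated inputs from saturating the weights; inputs that consistently precede output spikes are the ones that grow.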
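The abstract does not specify how spike patterns become EEG-like signals and control maps, so the following is only one plausible reading under stated assumptions: (1) the EEG-like trace is the population spike count convolved with an alpha-function kernel, a common coarse proxy for summed synaptic activity, with its DC offset removed; (2) each control map is a per-neuron exponentially smoothed firing rate normalized to [0, 1]. The kernel shape, time constants, and the name `spikes_to_eeg_and_controls` are hypothetical.

```python
import numpy as np


def spikes_to_eeg_and_controls(spikes, dt_ms=0.5, tau_ms=10.0, ctrl_tau_ms=50.0):
    """Map a (T, n) spike raster to an EEG-like trace and n control curves.

    Hypothetical mapping for illustration only.
    """
    s = np.asarray(spikes, dtype=float)
    T, n = s.shape

    # EEG-like trace: population spike count filtered by an alpha kernel
    # (peak value 1 at t = tau_ms), with the DC offset removed.
    t = np.arange(0.0, 5.0 * tau_ms, dt_ms)
    kernel = (t / tau_ms) * np.exp(1.0 - t / tau_ms)
    eeg = np.convolve(s.sum(axis=1), kernel)[:T]
    eeg -= eeg.mean()

    # Control maps: per-neuron exponential moving average of spiking,
    # normalized so each curve peaks at 1 (silent neurons stay at 0).
    alpha = dt_ms / ctrl_tau_ms
    ctrl = np.zeros((T, n))
    acc = np.zeros(n)
    for i in range(T):
        acc += alpha * (s[i] - acc)
        ctrl[i] = acc
    peak = ctrl.max(axis=0)
    ctrl = np.divide(ctrl, peak, out=np.zeros_like(ctrl), where=peak > 0)
    return eeg, ctrl
```

Each column of the returned control array could then be routed to one synthesis or performance parameter; a longer `ctrl_tau_ms` gives smoother, slower-moving controls.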