Gesture-Driven DDSP Synthesis for Digitizing the Chinese Erhu

Wenqi WU; Hanyu QU

- Format: oral

- Session: papers-5

- Presence: in person

- Duration: 10

- Type: medium

Abstract:

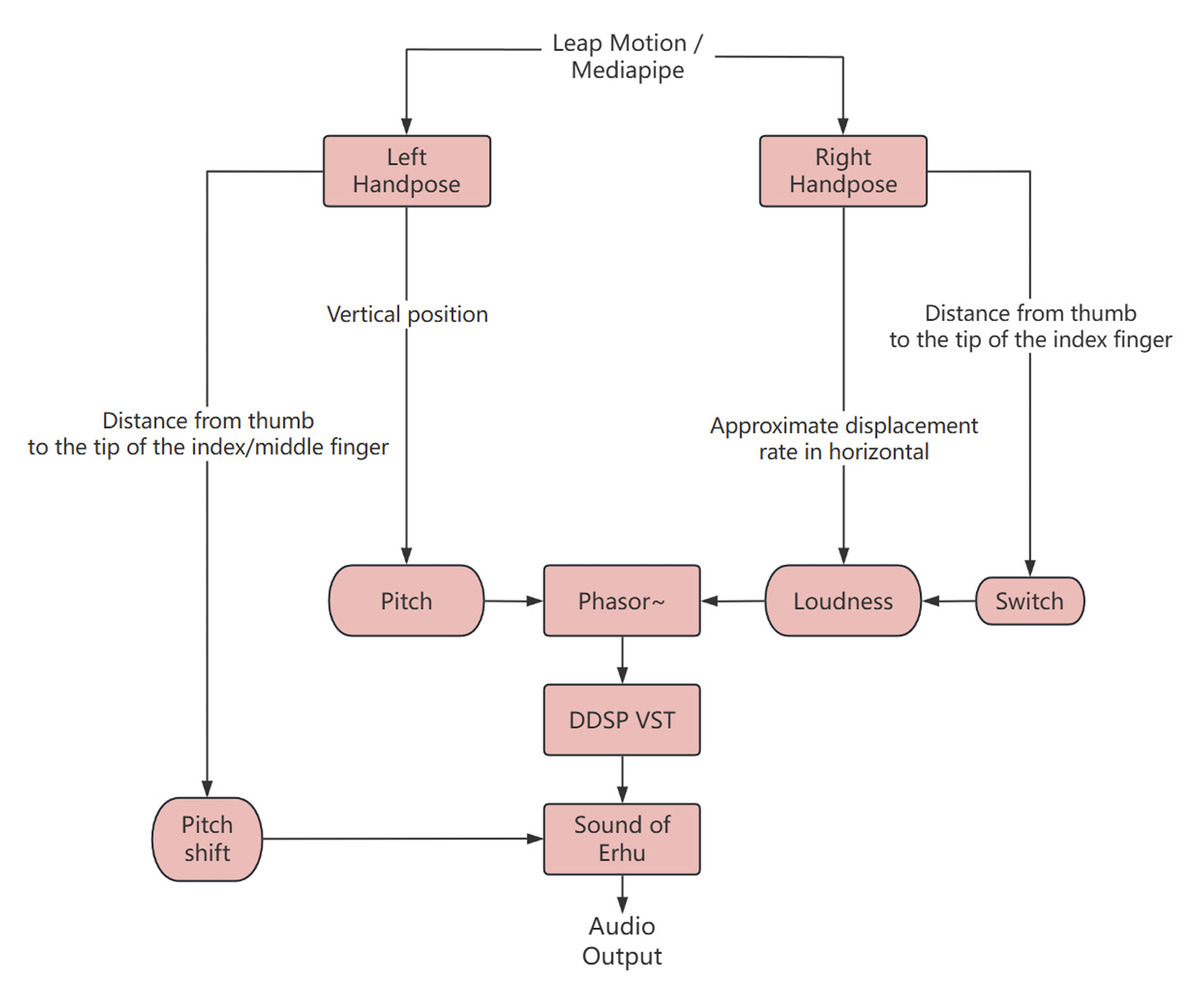

This paper presents a gesture-controlled digital Erhu system that merges traditional Chinese instrumental techniques with contemporary machine learning and interactive technologies. Drawing on the Erhu’s expressive playing techniques, we develop a dual-hand spatial interaction framework based on real-time gesture tracking. Hand movement data are mapped to sound synthesis parameters that control pitch, timbre, and dynamics, while a differentiable digital signal processing (DDSP) model, trained on a custom Erhu dataset, transforms basic waveforms into an authentic timbre that remains faithful to the instrument’s nuanced articulations. The system bridges traditional musical aesthetics with digital interactivity, emulating Erhu bowing dynamics and expressive techniques through embodied interaction. The study contributes a novel framework for digitizing Erhu performance practices, explores methods to align culturally informed gestures with DDSP-based synthesis, and offers insights into preserving traditional instruments within digital music interfaces.
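
The gesture-to-sound mapping described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the mapping, and the trained DDSP model is replaced here by a fixed harmonic oscillator bank of the kind such a model typically drives. The function names, the 16 kHz sample rate, the assumed pitch range (D4 to D6), and the choice of hand height for pitch and bowing-hand speed for dynamics are all hypothetical.

```python
import numpy as np

SAMPLE_RATE = 16000  # assumed audio rate in Hz (hypothetical)


def height_to_f0(height, low_hz=293.66, high_hz=1174.66):
    """Map a normalized hand height in [0, 1] to a frequency on a log scale.

    The default range (D4 to D6) is an assumption, not taken from the paper.
    """
    height = float(np.clip(height, 0.0, 1.0))
    return low_hz * (high_hz / low_hz) ** height


def speed_to_amplitude(speed, max_speed=1.0):
    """Map a bowing-hand speed to a linear amplitude clipped to [0, 1]."""
    return float(np.clip(speed / max_speed, 0.0, 1.0))


def harmonic_synth(f0, amplitude, duration=0.5, n_harmonics=8):
    """Sum harmonics of f0 with 1/k weights, dropping partials above Nyquist.

    A trained DDSP decoder would predict these weights per frame; here they
    are fixed, so the timbre is only a placeholder.
    """
    t = np.arange(int(duration * SAMPLE_RATE)) / SAMPLE_RATE
    k = np.arange(1, n_harmonics + 1)
    weights = np.where(k * f0 < SAMPLE_RATE / 2, 1.0 / k, 0.0)
    weights = weights / weights.sum()  # normalize so the peak stays <= amplitude
    partials = np.sin(2 * np.pi * np.outer(k * f0, t))
    return amplitude * (weights @ partials)


# Example: left hand halfway up the tracked range, right hand at half speed.
audio = harmonic_synth(height_to_f0(0.5), speed_to_amplitude(0.5))
```

The logarithmic pitch mapping makes equal hand displacements correspond to equal musical intervals, which is one plausible way to keep the control perceptually even. A real system would update these parameters per frame from the tracker rather than per note.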