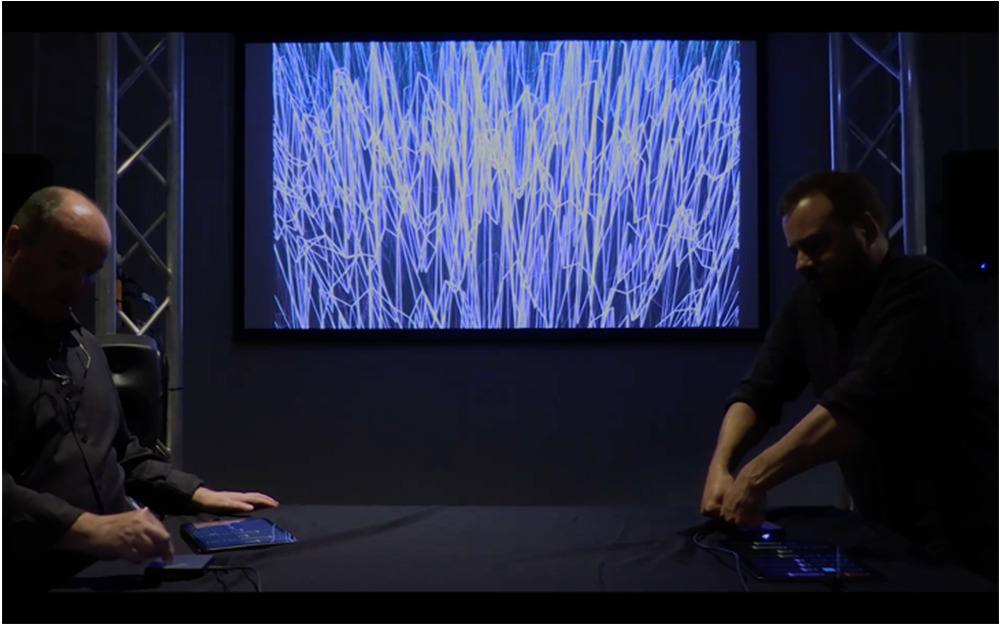

MSN/AV (Maximum Silence to Noise/Audio-Visual)

John Ferguson; Andrew Brown

- Format: Live Performance

- Session: concert-5

- Presence: In person

- Duration: 7 minutes

Abstract:

MSN/AV (Maximum Silence to Noise/Audio-Visual) is an interactive audiovisual system performed by two musicians. The sound world is created through ring modulation synthesis controlled by multi-dimensional touch gestures, an approach that provides a rich diversity of sonic potential whilst maintaining clear remnants of physical gesture. Each musician uses a ROLI Lightpad Block and an iPad running TouchOSC to control bespoke software written in Pure Data. Interaction data from each performer is passed to a TouchDesigner network that generates and/or manipulates visual materials. MSN/AV celebrates physical interplay with gestural interfaces and situates live improvisation within a responsive audiovisual environment. Sensor data is used to generate sound and graphics in real time, aiming for an audiovisual entanglement whose sonic and visual connections range from gentle steering of the musical improvisation to more autonomous and potentially disruptive behaviour. At the heart of MSN/AV is a novel implementation of ring modulation featuring two identical oscillators. These oscillators morph from silence to white noise, passing through sine and square waveforms along the way, which enables a smooth transition from simple to complex audio spectra.
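The morphing oscillator and the ring modulation of two identical copies can be sketched as follows. This is a minimal Python sketch, not the authors' Pure Data patch: it assumes the four shapes sit at equally spaced points on a single morph control (silence at 0, sine at 1/3, square at 2/3, white noise at 1) and that neighbouring shapes are linearly crossfaded. The names `morph_sample` and `ring_modulate` are hypothetical.

```python
import math
import random


def morph_sample(phase: float, morph: float, rng: random.Random) -> float:
    """One sample of a hypothetical silence-to-noise oscillator.

    phase: position within the cycle, in [0, 1).
    morph: 0 = silence, 1/3 = sine, 2/3 = square, 1 = white noise
    (equal spacing and linear crossfades are assumptions).
    """
    morph = min(max(morph, 0.0), 1.0)
    sine = math.sin(2.0 * math.pi * phase)
    square = 1.0 if phase < 0.5 else -1.0
    noise = rng.uniform(-1.0, 1.0)
    shapes = [0.0, sine, square, noise]  # silence, sine, square, noise
    pos = morph * 3.0                    # spread [0, 1] over three segments
    i = min(int(pos), 2)                 # lower neighbouring shape
    t = pos - i                          # crossfade amount within the segment
    return (1.0 - t) * shapes[i] + t * shapes[i + 1]


def ring_modulate(freq_a: float, morph_a: float,
                  freq_b: float, morph_b: float,
                  n: int, sr: int = 44100, seed: int = 0) -> list:
    """Multiply two identical morphing oscillators sample by sample."""
    rng = random.Random(seed)  # seeded so the noise is reproducible
    out = []
    for k in range(n):
        phase_a = (freq_a * k / sr) % 1.0
        phase_b = (freq_b * k / sr) % 1.0
        a = morph_sample(phase_a, morph_a, rng)
        b = morph_sample(phase_b, morph_b, rng)
        out.append(a * b)  # ring modulation: plain product of the two signals
    return out
```

Because the two signals are simply multiplied, setting either morph control to 0 silences the whole output, which is the "maximum silence" case the MSN Synthesis section describes.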

MSN Synthesis

The term ‘maximum silence’ refers to the absence of sound in a ring modulator when either modulating oscillator is silent. To keep modulation independent of the amplitude envelope, a DC-offset ‘silence’ signal is introduced, so that gain remains consistent as the modulating oscillator’s amplitude decreases. A ROLI Lightpad Block serves as the primary gestural controller for MSN/AV: its touchpad maps the silence-to-noise transition to the x-axis and oscillator pitch to the y-axis, while pressure controls the amplitude envelope. Two oscillators, each controlled by one finger on the multi-touch pad, are ring modulated, giving six dimensions of control (x, y and pressure for each finger) for expressive sound shaping. An iPad running TouchOSC provides on-screen sliders and buttons that control audio effects, including volume, panning automation, delay, and reverb, and, when applicable, visual parameters.
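The gesture mapping and the DC-offset ‘silence’ signal might look like this in outline. It is a hedged Python sketch rather than the MSN/AV implementation: the normalised 0..1 touch ranges, the exponential pitch curve between 40 Hz and 4000 Hz, the DC value of 1.0, and the way the modulator fades towards that DC value as the envelope falls are all assumptions. `Touch`, `OscParams`, `map_touch`, `control_vector`, `modulator_with_dc` and `ring_sample` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Touch:
    """One finger on the pad; each axis assumed normalised to 0..1."""
    x: float
    y: float
    pressure: float


@dataclass
class OscParams:
    morph: float      # x-axis: 0 = silence ... 1 = white noise
    freq_hz: float    # y-axis: oscillator pitch
    amplitude: float  # pressure: amplitude envelope level


def _clamp01(v: float) -> float:
    return min(max(v, 0.0), 1.0)


def map_touch(touch: Touch, low_hz: float = 40.0,
              high_hz: float = 4000.0) -> OscParams:
    """Map one touch to one oscillator's three parameters.

    The exponential pitch curve and 40-4000 Hz range are illustrative
    assumptions, not values taken from MSN/AV.
    """
    freq = low_hz * (high_hz / low_hz) ** _clamp01(touch.y)
    return OscParams(_clamp01(touch.x), freq, _clamp01(touch.pressure))


def control_vector(a: Touch, b: Touch) -> tuple:
    """Two fingers x three axes = six control dimensions."""
    pa, pb = map_touch(a), map_touch(b)
    return (pa.morph, pa.freq_hz, pa.amplitude,
            pb.morph, pb.freq_hz, pb.amplitude)


def modulator_with_dc(wave: float, amplitude: float, dc: float = 1.0) -> float:
    """As the envelope falls, fade towards a constant DC 'silence' value
    instead of towards 0 (an assumed reading of the DC offset)."""
    amplitude = _clamp01(amplitude)
    return amplitude * wave + (1.0 - amplitude) * dc


def ring_sample(carrier: float, wave: float, amplitude: float) -> float:
    """Ring-modulate one carrier sample by the DC-offset modulator."""
    return carrier * modulator_with_dc(wave, amplitude)
```

With the envelope at zero, `ring_sample(0.5, 0.7, 0.0)` returns 0.5, so the carrier passes through at unity gain instead of dropping to silence as it would with a plain product.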

Research Question(s)

The goal is to explore gestural control of sound and vision while creating new artistic work that engages with rich audio spectra and with the entanglements found in audiovisual practice. The expressiveness of gesturally controlled ring modulation synthesis builds on a history of ‘noisy’ interactive electronic instruments such as the Crackle Box, BoardWeevil, and Atari Punk Console, which use ring modulation or similar techniques to produce dynamic inharmonic spectra. ‘Live visuals’ are now common in contemporary performance, and the role of the musical score is evolving. Yet, aside from a few notable exceptions (Louise Harris, Myriam Bleau, Vicki Bennett, Nonotak Studio), there is little artistic research on improvising musicians in responsive audiovisual environments, and this is the area MSN/AV seeks to explore.

Our primary research questions are:

- To what extent can improvised performance with interactive technology be successfully situated in a responsive audio-visual environment?

- What creative opportunities emerge when ‘musical improvisation’ and ‘live visuals’ combine in an environment where the line between cause and effect is blurred?

Audiovisual Context

A useful starting point is visual music: Oskar Fischinger’s An Optical Poem (1938) is an early example, one that William Moritz (1986) used to suggest that ‘[s]ince ancient times, artists have longed to create with moving lights a music for the eye comparable to the effects of sound for the ear’ [4]. However, many early exemplars work with fixed musical and visual resources, whereas MSN/AV focuses on improvised live performance in interactive environments. Its real genesis might therefore be a work like John Cage’s Variations V (1965), which was created in collaboration with the Merce Cunningham Dance Company and used shadows cast on walls to trigger sound via light sensors. This resonates with what Simon Waters (2013) has termed ‘Touching at a Distance’ [5] and might also be considered through John Whitney’s (1994) notion of ‘audio-visual complementarity’ [6]. These historical ideas have all informed the development of this project. More recently, Cat Hope and Lindsay Vickery (2010) have furthered discussion of ‘The Aesthetics of the Screen-Score’ [3], and their Decibel ensemble is clearly a leader in this field. Similarly, Louise Harris (2021) has examined the nature of audio-visual experience [2] with a particular emphasis on media hierarchy; our work builds upon her 2016 article ‘Audiovisual Coherence and Physical Presence’ [1], which emphasises the relationship between audio-visual media and physical (human) presence.